Large language models (LLMs) are increasingly useful for tasks ranging from booking appointments to summarizing large volumes of text. Some LLM-based agents can also interact with external applications, such as calendars or airline booking apps, which introduces privacy and security risks.

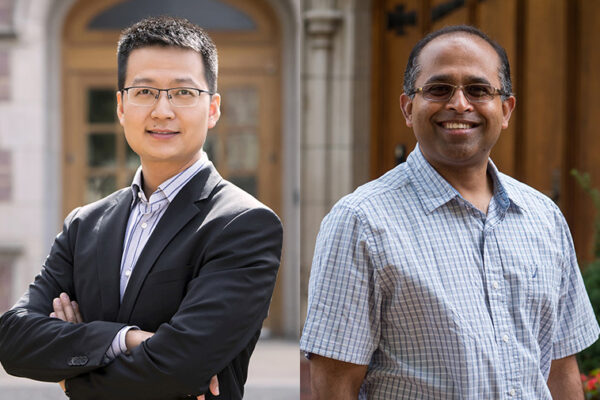

To mitigate these risks, Umar Iqbal, an assistant professor of computer science and engineering in the McKelvey School of Engineering at Washington University in St. Louis, and Yuhao Wu, a doctoral student in Iqbal’s lab, have developed IsolateGPT, a method that keeps external tools isolated from one another while they still run within the system, so users get the apps’ benefits without the risk of exposing their data. Other collaborators include Ning Zhang, an associate professor of computer science and engineering at WashU, and Franziska Roesner and Tadayoshi Kohno, both computer scientists at the University of Washington.

The researchers presented their work at the Network and Distributed System Security Symposium, held Feb. 24-28.

“These systems are very powerful and can do a lot of things on a user’s behalf, but users currently cannot trust them because they are simply unreliable,” Iqbal said. “We know that there’s a lot of benefit in having these tools interact with each other, so we define the interfaces that allow them to precisely interface with each other and provide the user with the information to know that the interfacing origin comes from a trustworthy component.”

Read more on the McKelvey Engineering website.