“I was a pretty early adopter,” Kory Bieg says with a smile. “Midjourney opened for beta testing almost a year ago.”

Bieg, AIA, a 1999 alumnus of WashU’s Sam Fox School of Design & Visual Arts, is discussing the state of artificial intelligence. Though the field’s roots can be traced back decades, quickening development and a new generation of apps and tools have, in recent months, pushed AI further into public consciousness than ever before.

In April, Bieg and award-winning videogame designer Ian Bogost — who happen to be childhood friends — were among the featured speakers at “AI + Design,” a mini-symposium organized by the Sam Fox School and the McKelvey School of Engineering. The event aimed to sort through the hype and sensationalism, of both utopian and apocalyptic varieties, and grapple with AI’s actual capabilities. Bieg and Bogost led the session “How to Create with Creative AI.”

“I think some of us have gotten the sense that we’re all going to be like George Jetson, pushing buttons,” quips Bogost, the Barbara and David Thomas Distinguished Professor and director of film and media studies in Arts & Sciences, who also serves as a professor of computer science and engineering at McKelvey Engineering. “But that’s not how this feels at all.”

Bieg, an associate professor and program director for architecture at the University of Texas at Austin, has followed the development of commercial text-to-image generators such as DALL-E, Stable Diffusion and Midjourney. But he credits Midjourney, which launched in spring 2022, with a particular affinity for architectural modeling.

Bieg, also founder of the research office OTA+, describes one of his own projects, “Housing Blocks,” which began with the prompt “camouflage.” Initial outputs, he ruefully recalls, featured tanks and artillery. But as he continued refining his prompts and sifting through the resulting images, things grew richer and less predictable.

“After four or five thousand iterations, the green camouflage started to become plants,” Bieg says. White-and-tan patterns slowly transformed into building materials like limestone and glass.

“The architecture evolved through the curation.”

General truths

“The AI juggernaut has been building for the last 10 years,” says Krishna Bharat, the symposium’s keynote speaker. “And it’s only just beginning.”

Bharat is a distinguished research scientist at Google and father of recent WashU architecture graduate Meera Bharat. He and his wife, Kavita, recently announced a gift to create the Kavita and Krishna Bharat Professorship, a joint appointment between the Sam Fox School and McKelvey Engineering.

“The Bharat Professorship will allow us to recruit a faculty member specializing in artificial intelligence who is also deeply invested in advancing architecture, art and design, preparing Sam Fox School students to address advanced technologies in their fields,” says Carmon Colangelo, the Sam Fox School’s Ralph J. Nagel Dean. “I am grateful for the enormous generosity and vision of Krishna and Kavita.”

In his symposium remarks, Bharat points out that contemporary “deep learning” AIs are built on artificial neural networks that in many ways mimic the human brain. “The way we train such a network is by giving it billions of examples,” he explains. “Every time it guesses correctly, it gets a reward. Every time it guesses wrong, we tell it the right answer. It’s a bit like how we learn from experience.”
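Bharat's description is an analogy. Modern deep networks adjust billions of parameters using gradient descent. Still, the "tell it the right answer when it guesses wrong" loop can be seen in miniature in the much older perceptron learning rule. Below is a minimal sketch that assumes a single artificial neuron learning the logical AND function from four labeled examples. The function names are hypothetical, there is no explicit reward step (correct guesses simply leave the weights alone), and this is not how Google or any modern system trains its models.

```python
# A toy "learn from examples" loop: one artificial neuron (a perceptron)
# learns the logical AND function. When it guesses wrong, the correct
# answer is used to nudge its weights; correct guesses change nothing.

def predict(weights, bias, x):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=20, lr=1.0):
    """Perceptron learning rule over repeated passes through the examples."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in examples:
            error = target - predict(weights, bias, x)  # 0 when the guess is right
            # Wrong guess: shift weights and bias toward the right answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Four labeled examples: inputs and the correct AND output.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(examples)
print([predict(weights, bias, x) for x, _ in examples])  # [0, 0, 0, 1]
```

After a handful of corrected mistakes, the neuron's guesses match every example. The rule is guaranteed to converge only because AND is linearly separable, and that is one reason deeper networks were needed.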

Earlier machine learning programs were more akin to an autocomplete function. Start typing “Washington University…,” and a search engine could easily compute, based on past queries, what percentage of users are looking for “Washington University in St. Louis.” (The figure, Bharat deadpans, is around 90%.)
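At its simplest, this kind of autocomplete comes down to counting. The following is a minimal sketch that assumes nothing more than a list of past queries. The log is invented for illustration, and its percentages say nothing about real search traffic. `completion_shares` is a hypothetical helper, not any search engine's actual method.

```python
from collections import Counter

def completion_shares(query_log, prefix):
    """Return each logged query starting with `prefix` together with its
    share of all matching queries, most common first.
    Matching is an exact, case-sensitive prefix test."""
    matches = Counter(q for q in query_log if q.startswith(prefix))
    total = sum(matches.values())
    return [(q, n / total) for q, n in matches.most_common()]

# Hypothetical query log, invented for illustration only.
log = [
    "washington university in st. louis",
    "washington university in st. louis",
    "washington university in st. louis",
    "washington university tuition",
    "weather st. louis",
]

for query, share in completion_shares(log, "washington university"):
    print(f"{query}: {share:.0%}")
# washington university in st. louis: 75%
# washington university tuition: 25%
```

A real system would also normalize capitalization and weight recent queries more heavily. The core idea, though, is a frequency table and not an understanding of what the user means.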

Diffusion models, the approach behind most contemporary image generators, were first proposed for machine learning in 2015 (the term is borrowed from thermodynamics). Essentially, they work by systematically degrading training images with noise, step by step, and then learning to reverse that process. Repeat this enough times, with large enough datasets, and the network learns to generate new images.
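The degrade-then-reverse idea can be sketched in a few lines. This is a minimal sketch built on three assumptions. First, it uses the simplified "jump straight to step t" formulation and linear noise schedule popularized by the 2020 DDPM paper, not the 2015 original. Second, the five-pixel "image" and all helper names are hypothetical. Third, the trained neural network is replaced by a cheat that is handed the true noise. In a real model, the network must learn to predict that noise from the degraded image alone.

```python
import math
import random

def alpha_bar(t, betas):
    """Fraction of the original signal that survives after t noising steps."""
    product = 1.0
    for beta in betas[:t]:
        product *= 1.0 - beta
    return product

def add_noise(x0, t, betas, rng):
    """Forward process: mix the clean image with Gaussian noise as it
    would look after t steps. Returns the noisy image and the noise used."""
    a = alpha_bar(t, betas)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * e for p, e in zip(x0, noise)]
    return xt, noise

def recover(xt, noise, t, betas):
    """Reverse the mixing, given the noise. A trained network would have to
    predict this noise from xt; here the true noise is passed in (a cheat)."""
    a = alpha_bar(t, betas)
    return [(p - math.sqrt(1.0 - a) * e) / math.sqrt(a) for p, e in zip(xt, noise)]

rng = random.Random(0)
# Linear schedule over 1,000 steps, from 0.0001 to 0.02 (an assumption).
betas = [0.0001 + (0.02 - 0.0001) * i / 999 for i in range(1000)]
image = [0.0, 0.25, 0.5, 0.75, 1.0]  # a hypothetical five-pixel grayscale image

for t in (10, 500, 1000):
    print(t, alpha_bar(t, betas))  # the surviving signal shrinks toward zero

noisy, noise = add_noise(image, 500, betas, rng)
restored = recover(noisy, noise, 500, betas)
print(max(abs(r - p) for r, p in zip(restored, image)))  # effectively zero
```

Generating a genuinely new image means running the learned reversal starting from pure noise, with no original image behind it. That is why the network ends up encoding general patterns in the data rather than specific pictures.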

In other words, the AI is not simply memorizing. “It’s learning general truths,” Bharat says. “If that seems like magic, trust me, most computer scientists would agree.”

What’s missing, from the perspective of creative professionals, is the sense of fine control. Listing prompts to create an AI image may seem quick and simple, but creating the right AI image, or a useful AI image, still requires human judgment. Bharat gives the example of a sophisticated medical illustration. “Every aspect has meaning,” he observes. Size, colors, labeling — all convey critical information. Similarly, a building diagram must navigate a series of complex constraints, including cultural norms, aesthetic compatibilities, client wishes, construction codes and engineering best practices.

“That’s a huge amount of dependency to be encoded,” Bharat says. “Diffusion models don’t know how to do this.”

Break it a little

A few weeks after the symposium, Jonathan Hanahan, who led the panel “Human–AI Interaction: Designing the Interface Between Human and Artificial Intelligence,” is still pondering the relationship between technology and creative practice.

“Throughout history, when disruptive new technologies come into play, there’s a lot of fear about what’s going to happen,” says Hanahan, associate professor of design. “And what often happens is that we create new practices.”

Hanahan is co-founder — with Heather Corcoran, the Halsey C. Ives Professor of Art, and Caitlin Kelleher, associate professor of computer science and engineering — of WashU’s Human-Computer Interaction (HCI) minor. He is also, in the compressed timeline of AI image generators, something of a veteran. He recalls taking a workshop, circa 2017, that focused on GANs, or generative adversarial networks, a sort of predecessor to current diffusion models.

“There was no commercialized software. We were writing code directly in the macOS terminal, which is always intimidating,” Hanahan says. As a designer, “I’m conversational in code but not fluent. I don’t write code as language so much as compile it as material, hacking together existing scripts.”

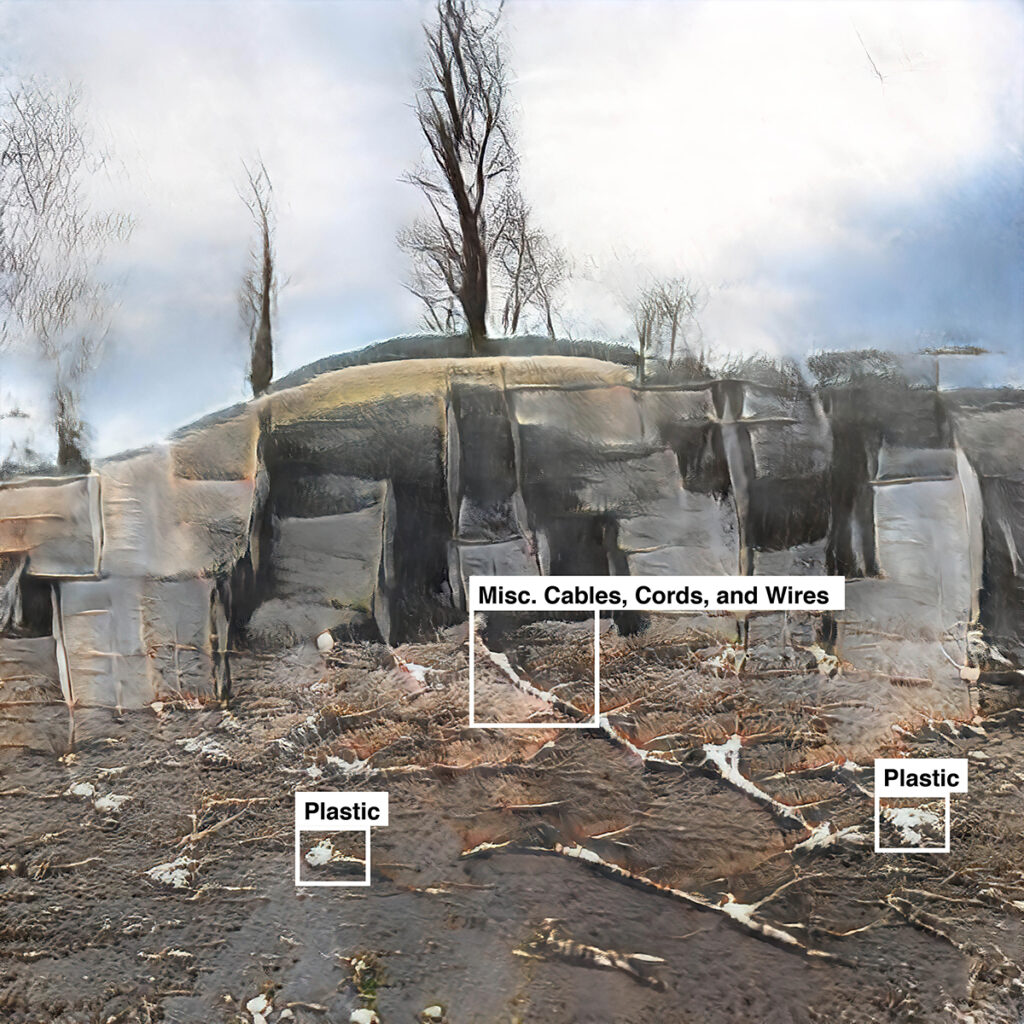

But by 2018, software with graphical user interfaces, such as RunwayML, was being released, allowing users not only to access machine learning models but also to train their own. Using Runway, Hanahan began his ongoing “Edgelands” series, which explores the proliferation of technological waste. At the project’s heart is a custom AI that Hanahan trained on two discrete datasets: photos of St. Louis landscapes and images of illegal e-waste dumpsites across Africa and Asia, including India. He then “crossbred” the datasets to create a third group of images, at once pastoral and haunting, depicting the infiltration of electronic debris into the natural world.

“If engineering strives to build perfect functional tools, maybe the role of art and design is to criticize and challenge, even break them a little,” Hanahan muses. “What’s this thing supposed to do? Can I do it differently or in a different order? Can I do something unexpected?

“Art and design have been doing this for decades with the Adobe Creative Suite,” Hanahan adds. “Now we have new tools to challenge.”

The element of surprise

Constance Vale, associate professor and chair of undergraduate architecture, is already seeing that process in action. Over the last few semesters, she’s observed how text-to-image generators have made their way into studio practice.

“It’s mostly used for explorative studies,” Vale says. “Students are going into Midjourney or DALL-E the same way that they go into their sketchbook or a quick study model. It’s a way to see what you can come up with.”

Vale, who organized “The Machinic Muse: AI & Creativity” session during the “AI + Design” symposium, is co-author of the forthcoming book Digital Decoys: An Index of Architectural Deceptions, about the role of image-making in contemporary architecture. She notes that though larger cultural conversations often highlight AI’s imitative capacity (riffs on famous paintings are a marketing staple), artists and designers are drawn to the technology’s weirdness and unpredictability.

“As humans, we tend to repeat certain things,” Vale says. “Our linguistic way of thinking limits us.” AI models, even those employing language prompts, are still fundamentally premised on statistical relationships, which can yield unpredictable results. And yet that unpredictability sometimes can be useful. “What’s most entrancing is the element of surprise.”

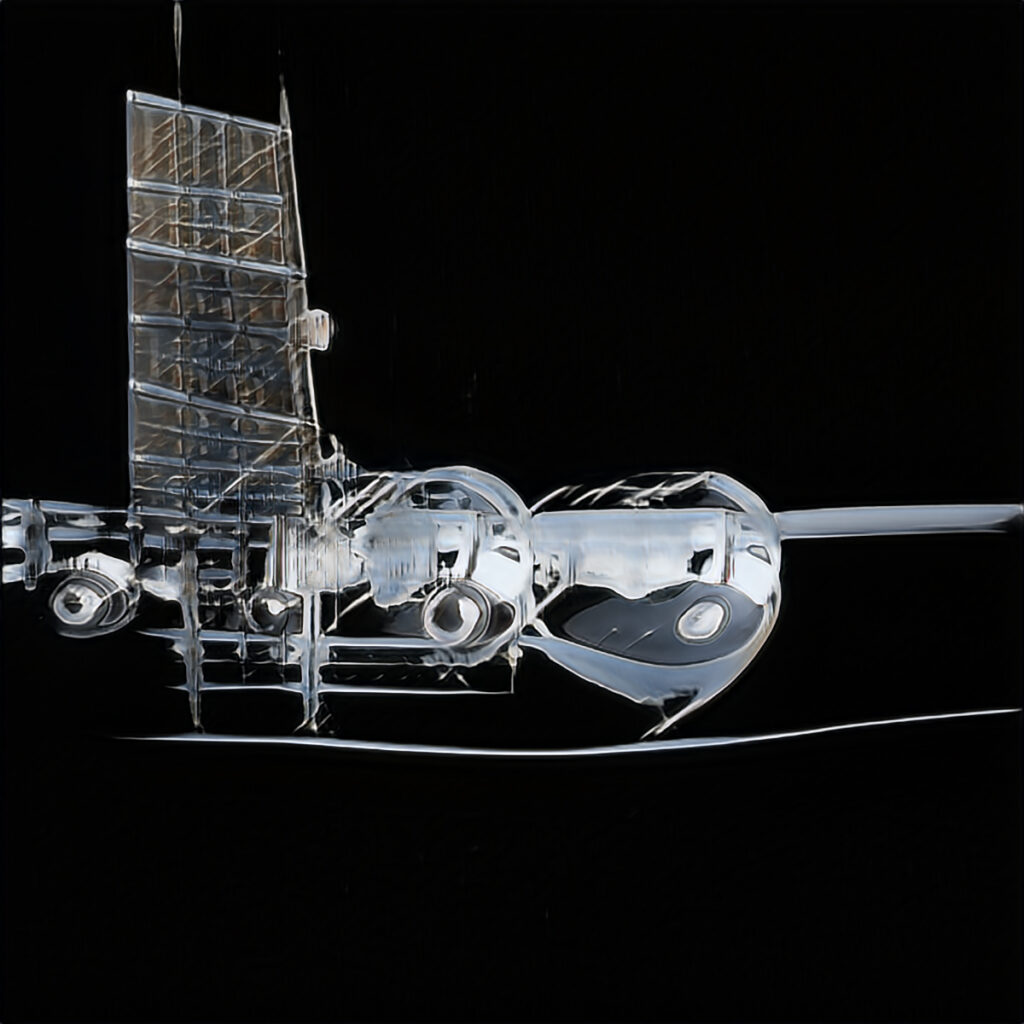

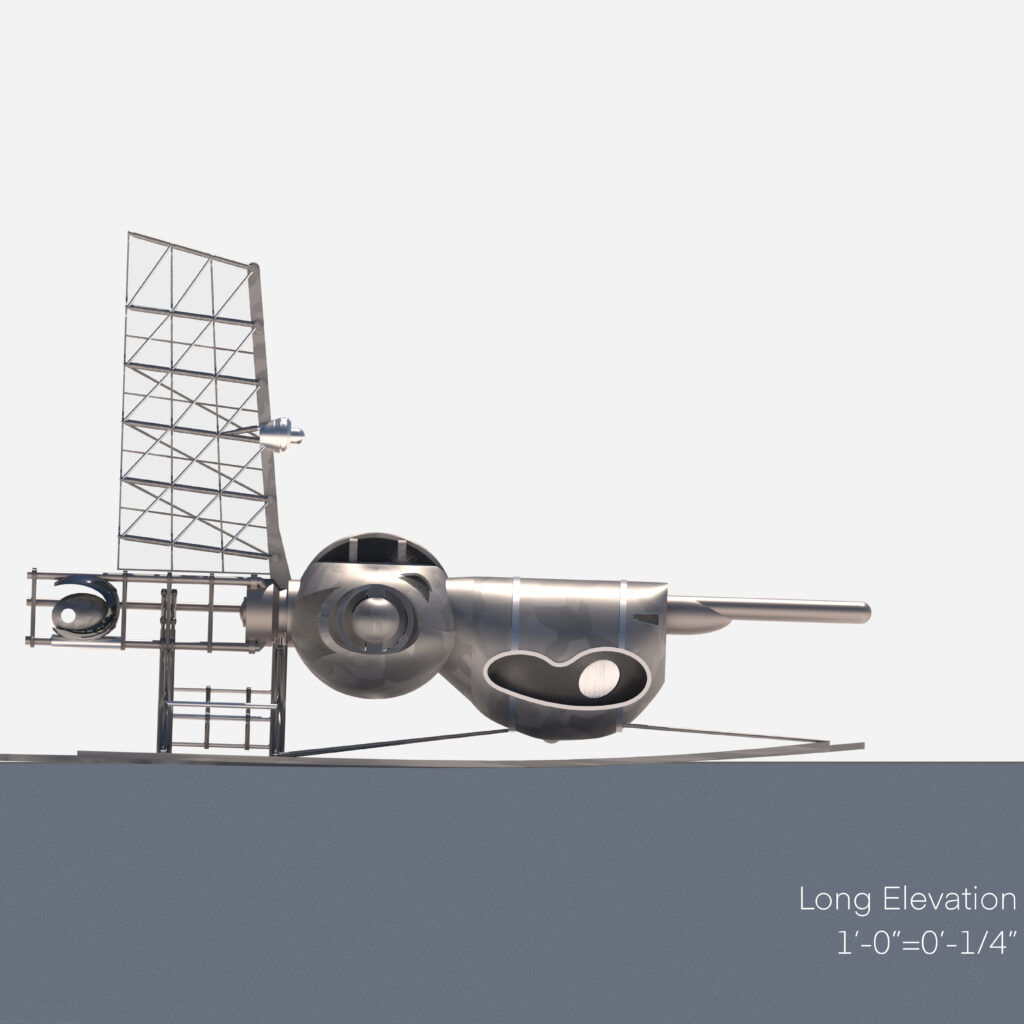

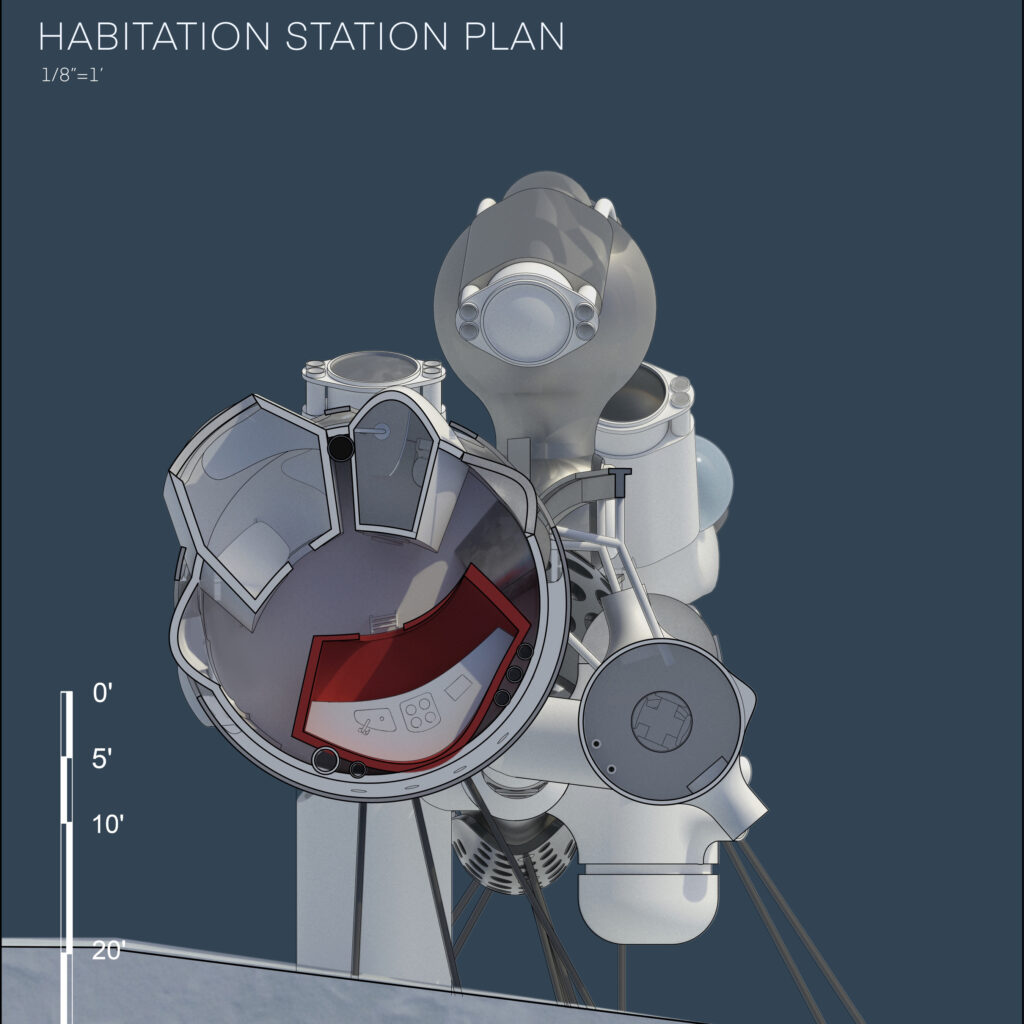

Vale points to a hypothetical Antarctic field station created last spring by current seniors Kaiwen Wang and Wayne Li, as part of Visiting Professor Karel Klein’s studio “What Machines Remember.” To begin, Wang and Li gathered a dataset of some 1,200 mechanical-themed images. Next, they used StyleGAN, running on Google Colab, to train a custom AI, cycling their results through about 200 iterations.

One particular output caught their attention. It showed a series of amorphous silver shapes vaguely suggesting skis, sails, solar panels and bulbous, mid-century aerospace design. Intrigued, they manually rebuilt those shapes and continued refining them with the 3D modeling software Rhino and the rendering engine V-Ray. Along the way, they also added an organic element by expanding their AI dataset with images of diatoms (photosynthetic algae enclosed in porous silica microshells), and they used Photoshop and even hand-drawing to puzzle out specific elements.

The final proposal is at once elegant and uncanny, a mobile lab that hangs from a 300-foot ice sheet like a twisting mechanical vine.

“It’s never a one-click thing,” Wang says. “AI doesn’t understand what it’s producing. Something might look cool, but AI can’t speak for the design.” It takes a human designer to analyze an image and “attach meaning to it.”

The next experiment

“AI was inspired by neuroscience,” Ralf Wessel says. “But right now, neuroscience is going through its own revolution.”

Wessel, professor of physics in Arts & Sciences, holds a joint appointment in the Department of Neuroscience in the School of Medicine. He is also one of three faculty leads — with Likai Chen, assistant professor of mathematics and statistics, and Keith Hengen, assistant professor of biology — for the new teaching and research cluster “Toward a Synergy Between Artificial Intelligence and Neuroscience.”

Funded as part of Arts & Sciences’ Incubator for Transdisciplinary Futures, the cluster aims to explore whether recent AI breakthroughs might shed new light on the nature of biological intelligence.

“AI is driven by data,” Wessel says. “When I started in neuroscience, we could attach one electrode to the brain at a time; today, we can attach thousands. This gives neuroscientists a huge amount of data — and allows us to compare activity inside the brain with what’s happening inside AI models.”

Of course, analogies only go so far. “Things like ChatGPT and DALL-E aren’t really thinking in the classical sense,” Wessel muses. “There’s no underlying biochemistry. They just find statistical relationships.”

Yet neuroscientists and computer scientists do share a common goal, in that both are seeking to understand the inner workings of immensely complex systems. And to the degree that we can study how those systems go about accomplishing similar tasks, Wessel adds, it may be possible to uncover common principles. “So why not work together?”

Recently, Wessel and physics graduate student Zeyuan Ye compared how a mouse and an AI trained on nature documentaries processed identical visual stimuli. “If you have a model that’s really good at something, and the brain is really good at the same thing, then as a researcher, you want to understand how they operate,” Wessel says.

Perhaps the resulting insights “can help us design the next experiment.”

Reading problems

Back in Weil Hall at “AI + Design,” Bieg relates an experiment of his own. Using the open-source platform Hugging Face, Bieg attempted to reverse-engineer the text-to-image process by uploading one of his finished renderings and asking the AI to suggest prompts that might have created it.

The results were instructive. Some suggestions — such as “interconnected” or “crystals” — seemed straightforwardly descriptive. Others were mysterious: “Selena Gomez.” “Texas Revolution.” “Dark vials.”

“Really strange terms,” Bieg recalls. “I thought, ‘OK, that’s how the computer reads my work. That’s not how I would read it. That’s not how any human would read the work.’” But sure enough, when Bieg fed those terms back into an image generator, they produced images “very similar to the original finished rendering.”

Caitlin Kelleher, HCI co-founder and participant on the “Human-AI Interaction” panel, notes that, among other things, text-to-image generators “really center examples as a core mechanic.”

“In some ways, that’s great,” Kelleher says. “A lot of human learning is tied to examples. But the kinds of examples our human minds produce, and the kinds of examples that a system produces, may be very different. So can we learn to navigate in a space of crazy examples?

“There’s also an issue of overload,” Kelleher adds. “If a restaurant has 50 billion items on its menu, how do you select the right thing?”

Chandler Ahrens, associate professor in the Sam Fox School and co-founder of Open Source Architecture, agrees that the speed and volume of generative AI present particular challenges.

“AI produces text really quickly,” says Ahrens, a contributor on “The Machinic Muse” panel. “In producing text, it also produces hallucinations that can seem real but that may be wrong.” This, he continues, “requires you as the reader to decipher when things aren’t right or don’t really make sense.”

In other words, Ahrens adds, quoting the futurist Peter J. Scott, “AI turns a writing problem into a reading problem.”

That dynamic, far from devaluing human judgment, highlights its centrality. “This is a very important aspect to think about when teaching,” Ahrens says. “AI is not replacing our expertise — it’s enhancing our abilities.”

The limits of language

“I don’t believe that AI is going to replace creativity,” says Alvitta Ottley, assistant professor of computer science and engineering, who teaches in the HCI minor and participated on the “Human-AI Interaction” panel. After all, she quips, the invention of the calculator didn’t eliminate the need for mathematicians. “But it will allow us to create different things.”

Ottley suspects that AI will speed the pathway from ideation to iteration. But she worries about how AI might reflect, and indeed perpetuate, hidden biases, especially as algorithms are updated with new datasets that are themselves produced by algorithms.

Kelleher, too, grapples with the issue of trustworthiness. She warns of AI-generated papers that have cited nonexistent results. “We’re building something that relies on human attention to police accuracy,” Kelleher says. That attention “can be pretty scarce.”

And yet, Kelleher adds, too-rigorous controls carry their own risks. Rather than attempting to encode some definitive notion of truth, she says, AI platforms will be better served by allowing space for “a diversity of visions.”

In his concluding remarks, Aaron Bobick, dean of the McKelvey School of Engineering and the James M. McKelvey Professor, returned to the question of how AI tools might impact curricula.

“Our goal is to produce the people who can do the best work,” Bobick says. “So how do we think about changing our teaching? How do we leverage these new tools, capabilities, procedures and possibilities?”

Bobick notes that while large-scale, language-based statistical models may make computational tools more accessible than ever to people without traditional training in coding, a solid foundation in computation and algorithmic development, for engineers and creatives alike, will make such tools “all the more powerful.”

Indeed, Bobick predicts that as AI technology continues to improve, such models will begin to push against the boundaries of what language can concisely describe.

“And that’s actually OK,” Bobick says. “We can’t let the limits of language restrict the spaces that we explore.”